From Algorithmic Arbiters to Stochastic Stewards: Deconstructing the Mechanisms of Ethical Reasoning Implementation in Contemporary AI Applications

Abstract

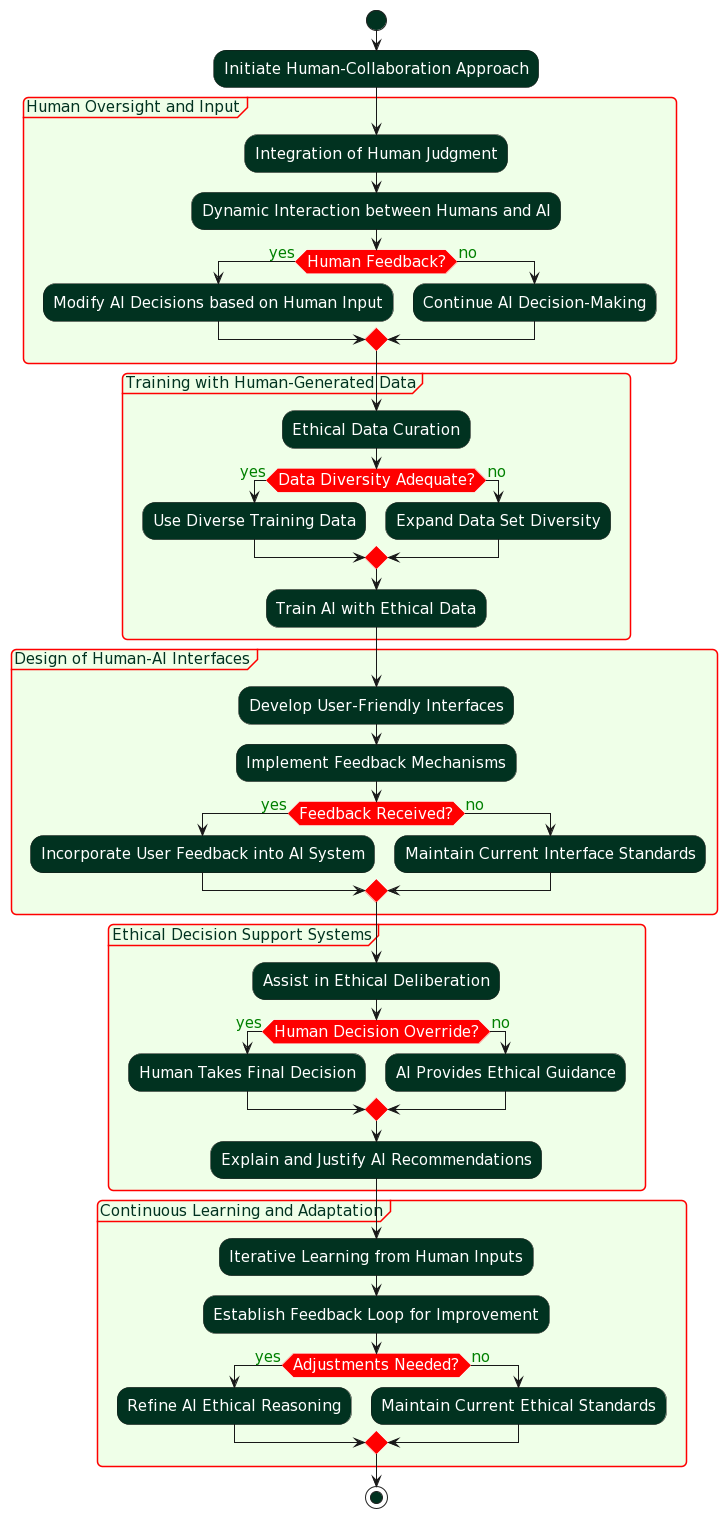

As artificial intelligence (AI) continues to permeate diverse sectors, from healthcare and finance to autonomous vehicles and personal assistants, the decisions made by these systems increasingly affect human lives and societal norms. Ensuring that AI operates within ethical boundaries is therefore not merely a theoretical concern but a practical necessity. This research analyzes four primary strategies for developing ethical reasoning in AI systems: the Algorithmic, Human-Collaboration, Regulation, and Random approaches. The primary objective is to explain how these strategies are implemented in contemporary AI systems, highlighting their methodologies, challenges, and practical implications. The Algorithmic Approach rests on the assumption that ethical guidelines can be encoded in algorithms. It involves selecting an appropriate ethical framework, translating ethical principles into quantifiable metrics, and designing algorithms that process these metrics while ensuring fairness and impartiality, followed by data collection, model training, real-world testing, iterative improvement, and adaptation to evolving ethical norms. The Human-Collaboration Approach, by contrast, integrates human judgment with AI capabilities. Central to this approach are user-friendly interfaces for effective human-AI interaction, ethical curation of data drawn from diverse human perspectives, and the positioning of AI systems as ethical decision-support tools; the approach also requires continuous learning from human input. The Regulation Approach centers on external guidelines and standards established by authoritative bodies to ensure that AI operates ethically. It encompasses regulatory frameworks, legislation, compliance mechanisms, ethical impact assessments, stakeholder involvement, and international collaboration, and it seeks to balance global standards for AI technology with local cultural and ethical norms.
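
As an illustration of translating an ethical principle into a quantifiable metric, the sketch below assumes that fairness is operationalized as demographic parity, that is, similar rates of favourable decisions across groups. This is one common choice, not one this paper prescribes; the function name `demographic_parity_difference`, the toy data, and the 0.1 tolerance are hypothetical.

```python
from collections import defaultdict


def demographic_parity_difference(decisions, groups):
    """Return the largest gap in favourable-decision rates across groups.

    decisions: iterable of 0/1 outcomes (1 = favourable decision).
    groups: iterable of group labels, aligned with decisions.
    """
    positives = defaultdict(int)
    totals = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    if not totals:
        raise ValueError("at least one decision is required")
    # Per-group rate of favourable decisions.
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values())


# Hypothetical audit tolerance; the abstract does not specify one.
TOLERANCE = 0.1

decisions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(decisions, groups)
print(f"parity gap = {gap:.2f}, within tolerance: {gap <= TOLERANCE}")
```

Here group "a" receives favourable decisions at a rate of 0.75 and group "b" at 0.25, so the gap of 0.50 would flag the model for review under the assumed tolerance. Other metrics (for example, equalized odds) could be substituted depending on the chosen ethical framework.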
The Random Approach introduces elements of randomness into AI decision-making to mitigate systematic biases and promote diverse outcomes. It is an exploratory strategy that requires balancing randomness with rationality, assessing the ethical implications of randomized outcomes, and managing the risks associated with unpredictability.
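
One way to picture "balancing randomness with rationality" is bounded randomization: the system chooses at random only among options whose scores fall within a margin of the best one, so randomness can break systematic preferences among near-equal candidates but never selects a clearly inferior option. The sketch below is a minimal illustration under that assumption; the function `bounded_random_choice`, the margin `epsilon`, and the example scores are hypothetical and not drawn from this paper.

```python
import random


def bounded_random_choice(scores, epsilon, rng=None):
    """Pick an option at random among those scoring within epsilon of the best.

    scores: dict mapping option name -> utility score (higher is better).
    epsilon: largest shortfall from the best score still treated as a tie.
    rng: optional random.Random instance, so decisions can be replayed in audits.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    if epsilon < 0:
        raise ValueError("epsilon must be non-negative")
    if rng is None:
        rng = random.Random()
    best = max(scores.values())
    # Rationality constraint: only near-optimal options are eligible.
    candidates = sorted(k for k, v in scores.items() if best - v <= epsilon)
    # Randomness: choose uniformly among eligible options instead of always
    # favouring the same one, which could encode a systematic bias.
    return rng.choice(candidates)


scores = {"applicant_1": 0.91, "applicant_2": 0.90, "applicant_3": 0.62}
rng = random.Random(42)  # seeded so the draw is reproducible
print(bounded_random_choice(scores, epsilon=0.02, rng=rng))
```

With `epsilon=0.02`, only `applicant_1` and `applicant_2` are eligible; setting `epsilon=0` removes the randomness entirely. The seeded generator reflects the risk-management concern in the text: an unpredictable decision can still be reproduced after the fact.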

Keywords

Algorithmic Approach, Ethical Reasoning, Human-Collaboration, Random Approach, Regulation Approach, Technological Ethics, Unpredictability